It's time to talk about optimizing code, so I was looking at the Basilisk II code.

Its time to talk about optimizing code, so was looking at Basilisk II code.

One thing I noticed was that it has its own platform-dependent read and write functions.

So I looked at where they were used. They are used in the UAE CPU memory header by the read and write functions of the CPU core, and elsewhere they are called yet again by other functions.

As it happens, my education was in electronics and programming microprocessors. Back then it was important to reduce code, because MCUs and MPUs (microcontrollers and microprocessor units) were slow.

We used inline assembler and macros. So now I'm going to show you the magic power of macros, and how big a difference they can make.

This code shows what was happening in Basilisk II. You have two loops, as is typical when filling memory or doing some graphics operation: a main routine calls do_sum, and do_sum calls sum. This structure can be changed, and the way to do it is with macros.

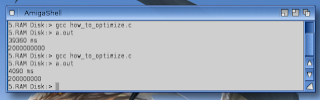
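Because the screenshot is not reproduced as text, here is a minimal sketch of the call structure described above, assuming a simple summing workload. Only the names `sum` and `do_sum` come from the text; their signatures, `WIDTH`, `HEIGHT` and `run_functions` are hypothetical stand-ins, not the actual Basilisk II code.

```c
/* Hypothetical sizes standing in for, e.g., a screen area being filled. */
#define WIDTH  1000
#define HEIGHT 1000

/* Innermost helper: adds one value to the running total. */
static long sum(long total, long value)
{
    return total + value;
}

/* Middle layer: only forwards to sum(), adding a second call per step. */
static long do_sum(long total, long value)
{
    return sum(total, value);
}

/* Two loops, as in a fill or graphics routine; each iteration makes two calls. */
long run_functions(void)
{
    long total = 0;
    for (int y = 0; y < HEIGHT; y++) {
        for (int x = 0; x < WIDTH; x++) {
            total = do_sum(total, 1);
        }
    }
    return total;
}
```

Calling `run_functions()` returns 1000000, after making two million function calls to get there.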

Now let's see what difference it made.

Before the change, it took 39360 ms (about 39.4 s).

After the change, it took 4090 ms (about 4.1 s).

So 39360 / 4090 ≈ 9.62: the unoptimized code takes about 9.6 times as long, which means it is roughly 862% slower (the macro version is about 9.6 times faster).
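The arithmetic above can be written as a few small helpers. This is a sketch, assuming timings are taken with the standard C `clock()` function; `elapsed_ms`, `speedup` and `percent_slower` are hypothetical names, and the text does not say how the original measurements were made. Note that `percent_slower` measures the *extra* time relative to the fast run, which is why it comes out one hundred points below the plain ratio times 100.

```c
#include <time.h>

/* Converts two clock() readings into elapsed CPU time in milliseconds. */
double elapsed_ms(clock_t start, clock_t end)
{
    return (double)(end - start) * 1000.0 / CLOCKS_PER_SEC;
}

/* How many times faster the new version is: before / after. */
double speedup(double before_ms, double after_ms)
{
    return before_ms / after_ms;
}

/* Extra time the old version needs, as a percentage of the new time. */
double percent_slower(double before_ms, double after_ms)
{
    return (before_ms - after_ms) / after_ms * 100.0;
}
```

To time a loop, read `clock()` before and after it and pass both readings to `elapsed_ms`. With the figures above, `speedup(39360.0, 4090.0)` is about 9.62 and `percent_slower(39360.0, 4090.0)` is about 862.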

So what is going on?

Function calls generate a lot of code, and they force the program to jump. Jumps are considered expensive and can result in cache misses. Functions are also designed to be isolated: values the calling code is using are saved on the stack, to be restored when the function returns; the parameters are filled in, only to be read back by the code inside the function; and when it is all done, the old values are restored and the program returns from the function.

So, as you can see, a lot goes on every time a function is called.

Now, when we rewrite it to use macros, the macro is simply substituted into the code before it is compiled. The result is that all that remains is incrementing a variable by a value, directly in the main function.
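Here is a minimal sketch of the macro version of the same hypothetical workload. `SUM`, `DO_SUM`, `run_macros`, `WIDTH` and `HEIGHT` are illustrative names, not taken from Basilisk II. Running the file through the preprocessor only (for example `gcc -E`) shows that each `DO_SUM(total, 1);` becomes `((total) += (1));`, so no call, stack save or return is left in the loop.

```c
/* Hypothetical sizes, matching the function-based sketch. */
#define WIDTH  1000
#define HEIGHT 1000

/* Hypothetical macro replacements for sum() and do_sum(). The preprocessor
 * pastes the body in place, so the loop contains no function call at all.
 * Arguments are parenthesized to avoid operator-precedence surprises. */
#define SUM(total, value)    ((total) += (value))
#define DO_SUM(total, value) SUM(total, value)

long run_macros(void)
{
    long total = 0;
    for (int y = 0; y < HEIGHT; y++) {
        for (int x = 0; x < WIDTH; x++) {
            /* After preprocessing this line reads: ((total) += (1)); */
            DO_SUM(total, 1);
        }
    }
    return total;
}
```

`run_macros()` returns the same 1000000 as the function-based version; only the way the code is generated differs.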